One of the new features in SQL Server 2016 is Polybase, the engine that provides access to Hadoop through T-SQL commands. Polybase has been present for several releases of Microsoft APS (Analytical Platform System). For details, see the APS instruction manual, which you can download at the followinghttp://www.microsoft.com/en-us/download/details.aspx?id=45294

In this article we will describe how to install this feature, its configuration, and its operation through T-SQL query. The environment used is comprised of two virtual machines, one for SQL Server 2016 and one for Hadoop. The SQL machine is a Windows Server 2012 R2 with 8GB of RAM and 4 virtual cores, while the Hadoop machine is a CentOS system with 4GB of RAM and 2 virtual processors. The Hadoop virtual machine was downloaded from the Hortonworks website. This is the HDP Sandbox 2.2.4 (see http://hortonworks.com/products/hortonworks-sandbox/ ).

Installation

Some minimum system requirements need to be met to be able to install Polybase:

- 64-bit SQL Server Evaluation edition

- Microsoft .NET Framework 4.0.

- Oracle Java SE RunTime Environment (JRE) version 7.51 or higher.

- Minimum memory: 4GB

- Minimum hard disk space: 2GB

After installing Polybase and activating the corresponding “instance feature” during installation, we can find between Windows services two services dedicated to it:

- SQL Server Polybase Data Movement, which creates, coordinates, and executes the parallel plans

- SQL Server Polybase Engine, which transfers data between the external sources and SQL Server.

Installing Polybase also creates three database in SQL Server:

- DWConfiguration

- DWDiagnostics

- DWQueue

These databases are listed in the documentation as databases for internal use.

Configuration

In the folder “Polybase/Hadoop/Conf” there are some configuration files:

- Core-site.xml

- Hdfs-site.xml

- hive-site.xml

- Mapred-site.xml

- Yarn-site.xml

The files contain configuration options relating to, for example, security (using Kerberos), or block size, or, as regards yarn, the classpath for applications (yarn.application.classpath), which happens to be a necessary configuration for the connection to YARN-based clusters.

In our case, the classpath is the following:

$HADOOP_CONF_DIR,/usr/hdp/current/hadoop-client/*,/usr/hdp/current/hadoop-client/lib/*,/usr/hdp/current/hadoop-hdfs-client/*,/usr/hdp/current/hadoop-hdfs-client/lib/*,/usr/hdp/current/hadoop-yarn-client/*,/usr/hdp/current/hadoop-yarn-client/lib/*

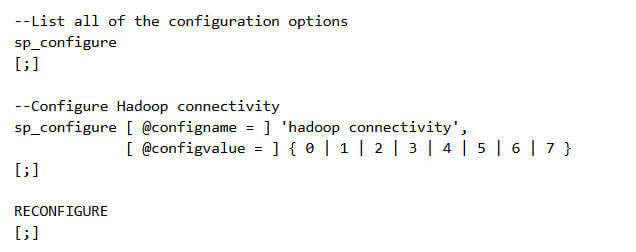

To use Polybase you must configure Hadoop connectivity through the following T-SQL command:

The possible values for @configvalue are listed below:

- 0: Disable Hadoop connectivity

- 1: Hortonworks HDP 1.3 on Windows Server

- 1: Azure blob storage (WASB[S])

- 2: Hortonworks HDP 1.3 on Linux

- 3: Cloudera CDH 4.3 on Linux

- 4: Hortonworks HDP 2.0 on Windows Server

- 4: Azure blob storage (WASB[S])

- 5: Hortonworks HDP 2.0 on Linux

- 6: Cloudera 5.1 on Linux

- 7: Hortonworks 2.1 and 2.2 on Linux

- 7: Hortonworks 2.2 on Windows Server

- 7: Azure blob storage (WASB[S])

If you want to configure the preinstalled virtual machine provided by Hortonworks, we need to use the following command:

The change in the pattern requires the RECONFIGURE command and restarting the two Polybase services.

How to use Polybase

To use Polybase and execute T-SQL queries we need to take the following steps:

- Create an external data source

- Create a file format

- Create an external table linked to Hadoop data

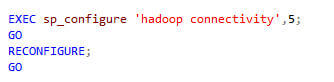

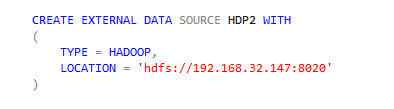

The creation of an external source is done with the following command:

The only type of external data source currently supported is HADOOP. However the location can be either a HDFS path or an Azure blob storage path.

By specifying the RESOURCE_MANAGER_LOCATION option, the optimizer can choose to execute the query through a MapReduce job, creating a logical pushdown predicate.

We can then create the external source, specifying the IP address of the Hadoop machine with port 8020:

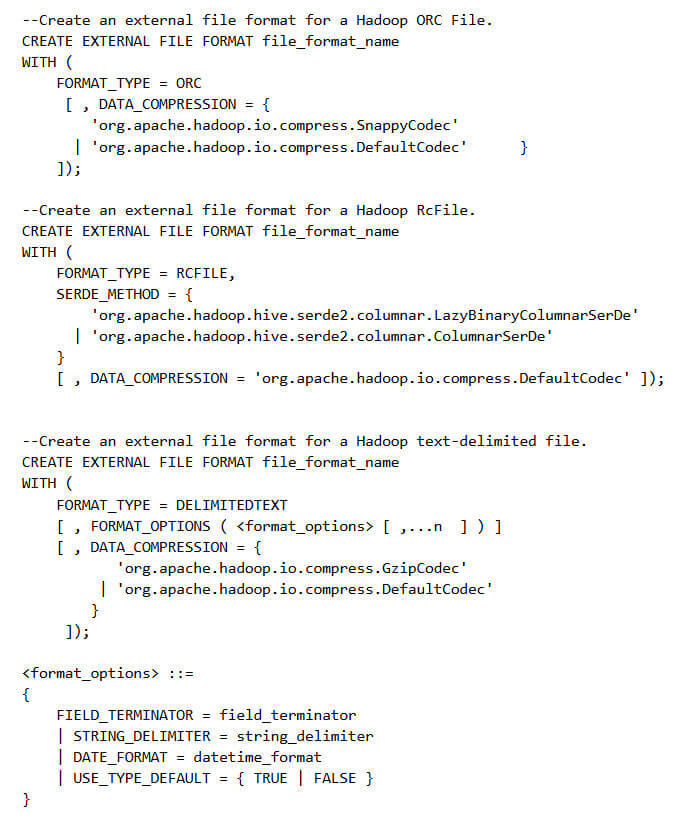

The next step is to create a “file format”, necessary to read data from Hadoop. The formats currently supported by Polybase are:

- Delimited text

- Hive RCFile

- Hive ORC

The three syntaxes are listed below:

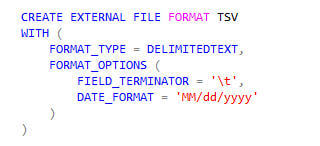

We use the text format, through the T-SQL command included in the image below:

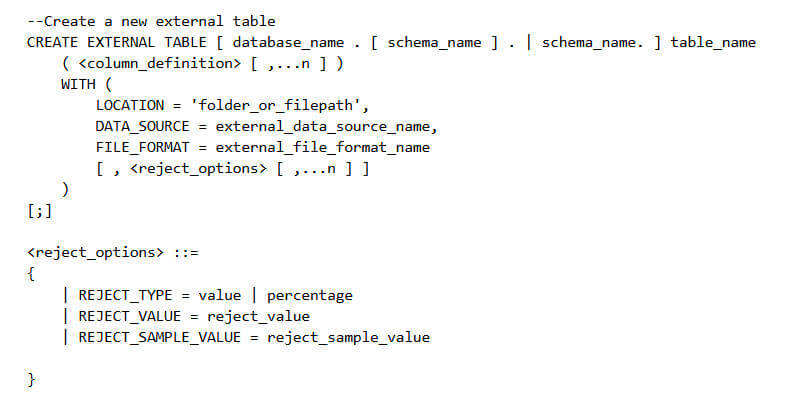

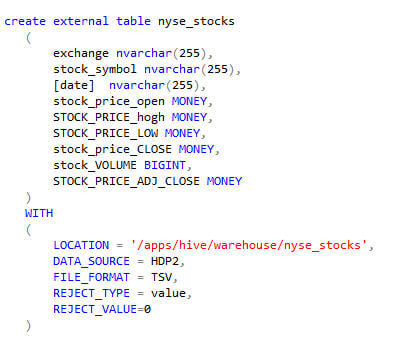

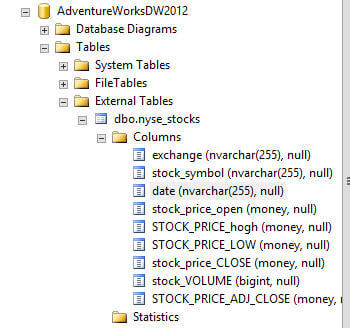

We are finally ready to create the external table. The CREATE EXTERNAL TABLE command requires the name of the table to create, the columns with the various data types, the reference to external data, the format of the external file.

For more details see https://msdn.microsoft.com/en-us/library/dn935021%28v=sql.130%29.aspx

There is also the possibility to force the creation of statistics on external data through the CREATE STATISTICS command. In general, the optimizer automatically creates statistics but in some cases it may be necessary to create them manually. Seehttps://msdn.microsoft.com/en-us/library/ms188038%28v=sql.130%29.aspx

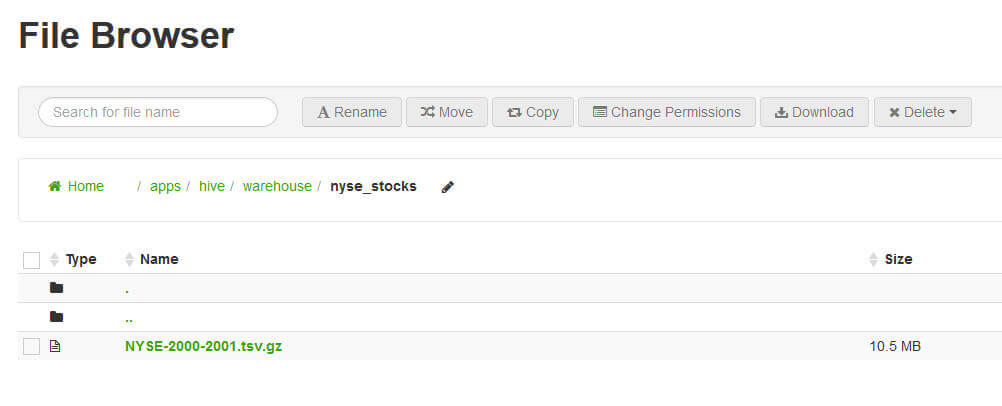

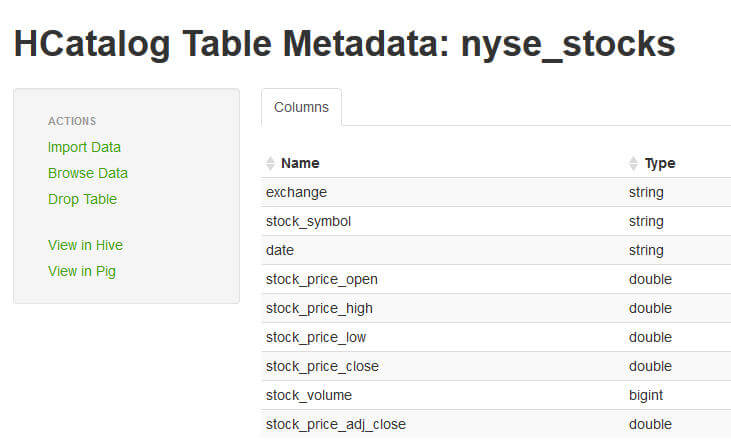

For the creation of the outer table, we can use a file containing the stock prices of NYSE. The images below show the location of the file in Hadoop and the HCatalog table created in the system:

We then create this table:

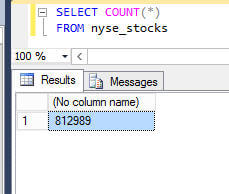

Let’s do some queries. First, we can count the rows of the outer table:

The query takes 3 seconds to run.

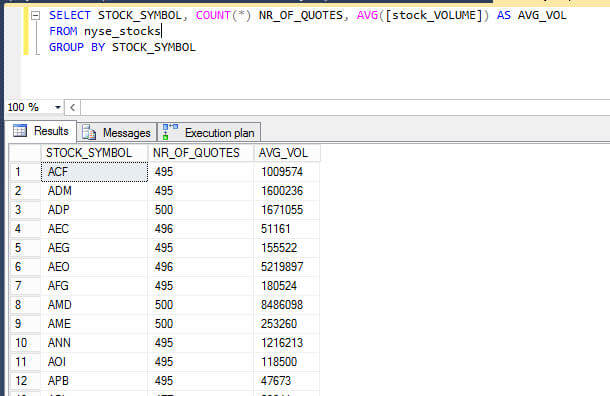

Another example: we can count the number of rows for each action and calculate the average trading rate.

In this case the rows that returned were 1734 and the time employed was 7 seconds.

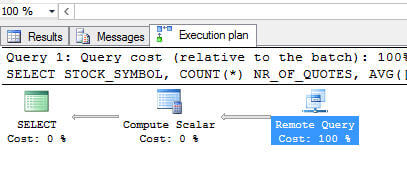

The execution plan in both cases is very simple. This shows how the query was solved, in remote, by Polybase.

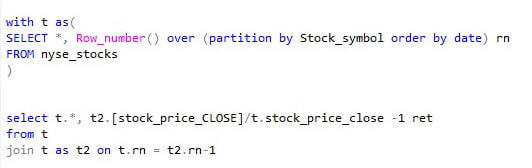

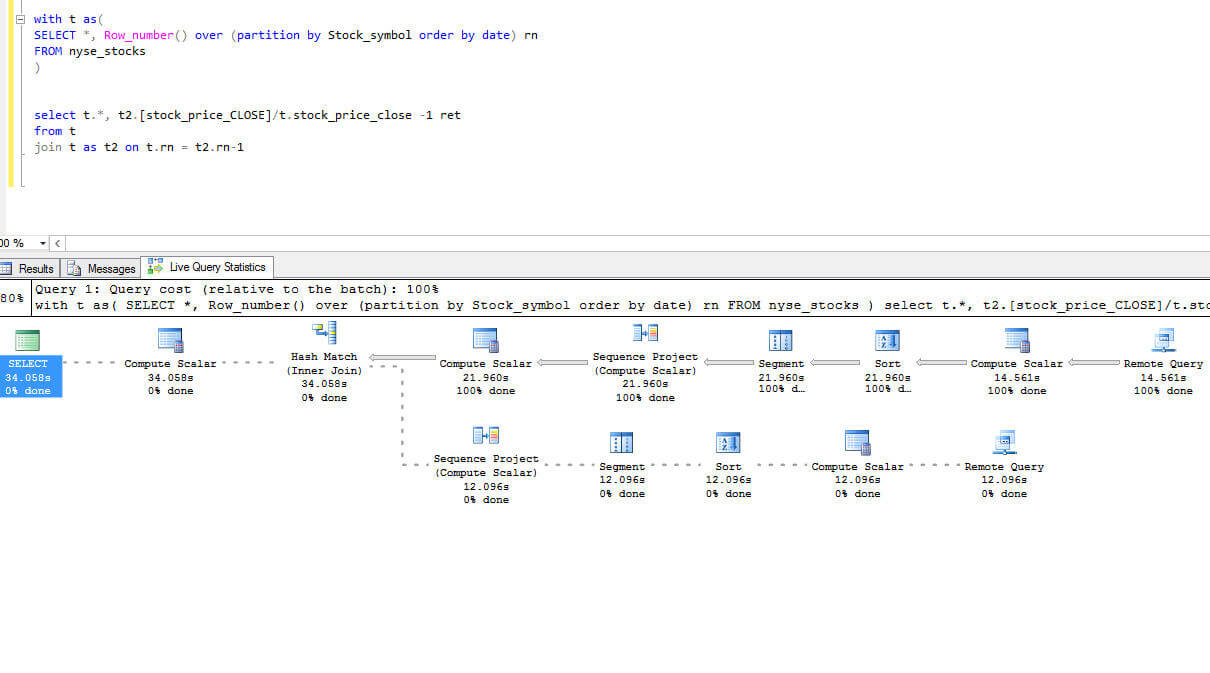

Let’s try a more complex query, in which we calculate the daily change in the price of each share.

In this case the execution plan shows how the query was partially resolved on the remote system (HDP) and in part locally.

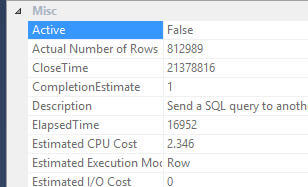

The properties for the first remote query shows a number of lines equal to 812,989, showing that the part run on Hadoop is limited to extracting data.

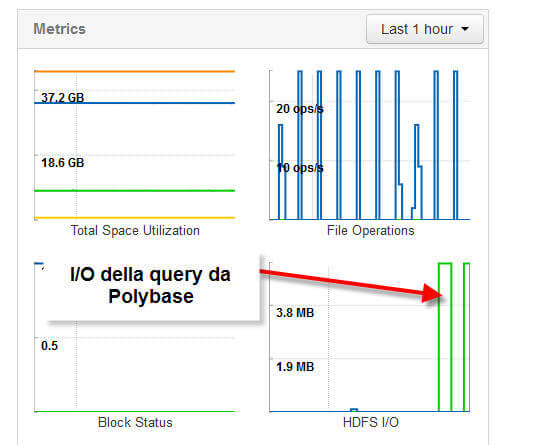

Now let’s see what effect the query, Hadoop side, has in terms of I/O. Using Ambari we can monitor the situation, activating the metrics recording service. In the case of the query we considered before, we have two peaks of I/O of reading in correspondence of the query. This means that the query in CTE is performed twice in Hadoop.

Conclusions

Polybase allows you to access Hadoop data through a T-SQL interface. Currently, in the CTP2, the features are still limited (e.g. it is impossible to write data to Hadoop). In any case, it is a very interesting and powerful tool to set a data lake-type architecture.

Leave A Comment